The worlds of telephony and artificial intelligence are converging to create powerful new communication experiences. AI phone agents—intelligent systems capable of answering calls, understanding natural language, and providing personalized assistance—are transforming how businesses handle voice communication. As a developer, you can now build sophisticated AI phone agents that integrate seamlessly with existing telephone infrastructure while leveraging state-of-the-art AI capabilities.

In this guide, we'll explore how to build AI phone agents using VideoSDK's real-time communication platform, SIP integration, and AI services. You'll learn how to create systems that can answer regular phone calls, understand spoken requests, and respond naturally—bridging the gap between traditional telephony and modern AI.

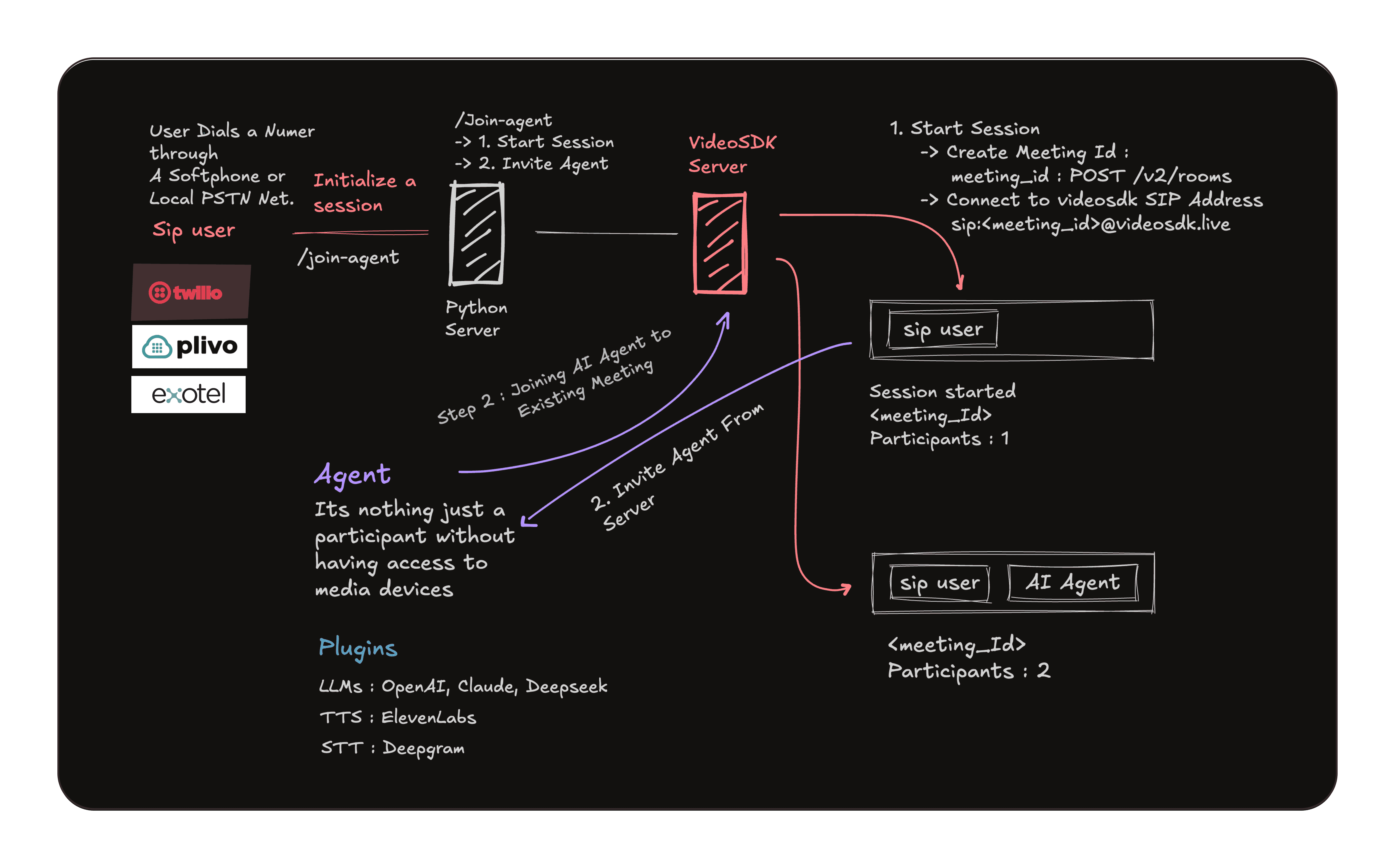

Understanding AI Phone Agent Architecture

AI phone agents combine several technologies to create a seamless experience:

- Telephony Integration: Using SIP (Session Initiation Protocol) to connect traditional phone systems to digital platforms

- Real-time Communication: Managing audio streams and connections between callers and virtual agents

- Speech Recognition: Converting spoken words to text for processing

- Natural Language Understanding: Determining caller intent and extracting relevant information

- Dialogue Management: Maintaining conversation context and flow

- Text-to-Speech: Converting the agent's text responses back to natural-sounding speech
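These components run as a loop, once per caller turn. The minimal, SDK-independent sketch below wires together three async callables that you would supply: speech-to-text, a response step that combines understanding and dialogue management, and text-to-speech. It also keeps the conversation history that dialogue management depends on. `DialoguePipeline` and `Turn` are illustrative names and are not part of VideoSDK.

```python
import asyncio
from dataclasses import dataclass, field
from typing import Awaitable, Callable, List


@dataclass
class Turn:
    """One caller utterance and the agent's reply."""
    caller_text: str
    agent_text: str


@dataclass
class DialoguePipeline:
    """STT -> understanding/dialogue -> TTS, with conversation history."""
    transcribe: Callable[[bytes], Awaitable[str]]         # speech-to-text
    respond: Callable[[str, List[Turn]], Awaitable[str]]  # NLU + dialogue management
    synthesize: Callable[[str], Awaitable[bytes]]         # text-to-speech
    history: List[Turn] = field(default_factory=list)

    async def handle_utterance(self, audio: bytes) -> bytes:
        """Turn one caller utterance into the agent's spoken reply."""
        text = await self.transcribe(audio)
        if not text:
            return b""  # Nothing recognised; say nothing
        # The response step sees earlier turns, so it can keep context
        reply = await self.respond(text, self.history)
        self.history.append(Turn(caller_text=text, agent_text=reply))
        return await self.synthesize(reply)


async def demo() -> bytes:
    """Run two turns through the pipeline with stand-in stages."""
    async def fake_stt(audio: bytes) -> str:
        return audio.decode()

    async def fake_respond(text: str, history: List[Turn]) -> str:
        return f"Turn {len(history) + 1}: you said '{text}'"

    async def fake_tts(text: str) -> bytes:
        return text.encode()

    pipeline = DialoguePipeline(fake_stt, fake_respond, fake_tts)
    await pipeline.handle_utterance(b"hello")
    return await pipeline.handle_utterance(b"what are your hours")


print(asyncio.run(demo()))  # b"Turn 2: you said 'what are your hours'"
```

Because the history lives inside the pipeline, the response step can resolve follow-ups such as "what about Saturday?" against earlier turns.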

The architecture shown in the diagram illustrates how these components work together:

- A user calls a phone number through a softphone app or traditional phone system

- The telephony/SIP provider (for example, Twilio) receives the call and sends a webhook request to your server

- Your server creates a VideoSDK meeting and returns call instructions (TwiML) that connect the caller to it over SIP

- The AI agent joins the same VideoSDK meeting to interact with the caller

- Audio streams between the caller and AI are processed in real-time
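The call instructions returned by your server are TwiML, an XML document that Twilio executes for the call. As a rough illustration, the standard-library sketch below builds a document with the same shape that the VoiceResponse code in step 3 produces: a `<Say>` greeting followed by a `<Dial>` to a `<Sip>` URI. The function name, room ID, and credentials are placeholders.

```python
import xml.etree.ElementTree as ET


def build_connect_twiml(room_id: str, sip_username: str, sip_password: str,
                        greeting: str = "Connecting you to our AI assistant now.") -> str:
    """Return TwiML that greets the caller, then bridges the call to a VideoSDK room over SIP."""
    response = ET.Element("Response")
    ET.SubElement(response, "Say").text = greeting  # Spoken to the caller first
    dial = ET.SubElement(response, "Dial")          # Then bridge the call...
    sip = ET.SubElement(dial, "Sip", username=sip_username, password=sip_password)
    sip.text = f"sip:{room_id}@sip.videosdk.live"   # ...to the room's SIP URI
    return '<?xml version="1.0" encoding="UTF-8"?>' + ET.tostring(response, encoding="unicode")


print(build_connect_twiml("example-room-id", "sip-user", "sip-pass"))
```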

Let's break down how to implement this architecture in code.

Setting Up Your VideoSDK AI Phone Agent

1. Create a FastAPI Server with SIP Integration

First, we'll create a FastAPI server that handles incoming calls from Twilio and connects them to VideoSDK:

```python
import asyncio
import logging
import os

import httpx
from dotenv import load_dotenv
from fastapi import FastAPI, HTTPException, Request, Response
from fastapi.middleware.cors import CORSMiddleware
from twilio.request_validator import RequestValidator
from twilio.twiml.voice_response import VoiceResponse

# Our VideoSDK agent implementation (agent.py, built in steps 4 and 5)
from agent import VideoSDKAgent

# Load environment variables from a local .env file
load_dotenv()

# Set up logging
logging.basicConfig(level=logging.INFO, format="%(asctime)s - %(levelname)s - %(message)s")
logger = logging.getLogger(__name__)

# Credentials from environment variables
VIDEOSDK_SIP_USERNAME = os.getenv("VIDEOSDK_SIP_USERNAME")
VIDEOSDK_SIP_PASSWORD = os.getenv("VIDEOSDK_SIP_PASSWORD")
VIDEOSDK_AUTH_TOKEN = os.getenv("VIDEOSDK_AUTH_TOKEN")
TWILIO_AUTH_TOKEN = os.getenv("TWILIO_AUTH_TOKEN")

# Twilio signature validator and the FastAPI app.
# Fall back to "" so a missing token doesn't crash at import time;
# the webhook handler rejects every request when the token is unset.
validator = RequestValidator(TWILIO_AUTH_TOKEN or "")
app = FastAPI()

# Enable CORS. Twilio webhooks are server-to-server and don't need it;
# this only matters if a browser front end also calls this API.
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_credentials=True,
    allow_methods=["*"],
    allow_headers=["*"],
)

# Active agents, keyed by VideoSDK room ID
active_agents = {}
```
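Because `os.getenv` returns `None` for unset variables, a missing credential in the setup above only surfaces when the first call arrives. A small startup check can fail fast instead. The sketch below shows one way to do this. The helper names are illustrative, and you might call `require_settings()` right after `load_dotenv()`.

```python
import os
from typing import List, Mapping, Optional

REQUIRED_SETTINGS = (
    "VIDEOSDK_SIP_USERNAME",
    "VIDEOSDK_SIP_PASSWORD",
    "VIDEOSDK_AUTH_TOKEN",
    "TWILIO_AUTH_TOKEN",
)


def missing_settings(env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the names of required settings that are unset or blank."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_SETTINGS if not env.get(name, "").strip()]


def require_settings(env: Optional[Mapping[str, str]] = None) -> None:
    """Raise at startup instead of failing on the first incoming call."""
    missing = missing_settings(env)
    if missing:
        raise RuntimeError(f"Missing required environment variables: {', '.join(missing)}")
```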

2. Implement the VideoSDK Room Creation Function

Next, we need a function to create VideoSDK rooms for our calls:

```python
async def create_videosdk_room() -> str:
    """
    Calls the VideoSDK API to create a new room.
    Returns the roomId if successful, raises HTTPException otherwise.
    """
    if not VIDEOSDK_AUTH_TOKEN:
        logger.error("VideoSDK Auth Token is not configured.")
        raise HTTPException(status_code=500, detail="Server configuration error [VSDK Token]")

    headers = {"Authorization": VIDEOSDK_AUTH_TOKEN}

    async with httpx.AsyncClient() as client:
        try:
            logger.info("Attempting to create VideoSDK room")
            response = await client.post("https://api.videosdk.live/v2/rooms", headers=headers, timeout=10.0)
            response.raise_for_status()
            response_data = response.json()
        except (httpx.HTTPError, ValueError) as exc:
            # Network errors, non-2xx responses, or a body that isn't valid JSON
            logger.error(f"Error creating VideoSDK room: {exc}", exc_info=True)
            raise HTTPException(status_code=502, detail="Failed to create VideoSDK room") from exc

    # Checked outside the try block so this HTTPException isn't caught and re-wrapped above
    room_id = response_data.get("roomId")
    if not room_id:
        logger.error(f"VideoSDK response missing 'roomId'. Response: {response_data}")
        raise HTTPException(status_code=500, detail="Failed to get roomId from VideoSDK")

    logger.info(f"Successfully created VideoSDK room: {room_id}")
    return room_id
```
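Room creation sits on the call's critical path, so a single transient network error means the caller hears the error message. One option, assuming short retries are acceptable while the caller waits, is to wrap the call in a bounded retry with exponential backoff. `retry_async` below is a hypothetical helper and is not part of httpx or VideoSDK.

```python
import asyncio
from typing import Awaitable, Callable, Tuple, Type, TypeVar

T = TypeVar("T")


async def retry_async(
    operation: Callable[[], Awaitable[T]],
    attempts: int = 3,
    base_delay: float = 0.2,
    retry_on: Tuple[Type[BaseException], ...] = (Exception,),
) -> T:
    """Await operation(), retrying on the given exceptions with exponential backoff.

    Waits base_delay, 2 * base_delay, 4 * base_delay, ... between attempts and
    re-raises the last exception once all attempts are used up.
    """
    for attempt in range(attempts):
        try:
            return await operation()
        except retry_on:
            if attempt == attempts - 1:
                raise
            await asyncio.sleep(base_delay * (2 ** attempt))
    raise ValueError("attempts must be at least 1")
```

In the endpoint you might then write `room_id = await retry_async(create_videosdk_room, retry_on=(HTTPException,))`, keeping the total delay to a second or two.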

3. Create the Endpoint to Handle Phone Calls

The core of our system is the webhook endpoint that Twilio will call when a call comes in:

```python
@app.post("/join-agent", response_class=Response)
async def handle_twilio_call(request: Request):
    """
    Handles the incoming Twilio call webhook.
    Creates a VideoSDK room and returns TwiML that connects the call via SIP.
    """
    # Validate that the request really comes from Twilio
    form_data = await request.form()
    # Default to "" (not None) so a missing header fails validation cleanly
    twilio_signature = request.headers.get("X-Twilio-Signature", "")
    # Must match the public URL Twilio called; behind a proxy or tunnel,
    # request.url may differ (e.g. http vs https) and validation will fail
    url = str(request.url)

    if not TWILIO_AUTH_TOKEN or not validator.validate(url, form_data, twilio_signature):
        logger.warning("Twilio request validation failed.")
        raise HTTPException(status_code=403, detail="Invalid Twilio Signature")

    logger.info("Received valid request on /join-agent")

    try:
        # Step 1: Create a VideoSDK room for this call
        room_id = await create_videosdk_room()
        agent_token = VIDEOSDK_AUTH_TOKEN

        # Step 2: Create the agent and join it to the room in the background
        logger.info(f"Initializing and connecting agent for room {room_id}")
        agent = VideoSDKAgent(room_id=room_id, videosdk_token=agent_token, agent_name="AI Phone Assistant")
        active_agents[room_id] = agent
        # Keep a reference to the task so it isn't garbage-collected before it finishes
        agent.connect_task = asyncio.create_task(agent.connect())
        logger.info(f"Agent connection task created for room {room_id}")

        # Step 3: Generate TwiML that dials the room's VideoSDK SIP URI
        sip_uri = f"sip:{room_id}@sip.videosdk.live"
        logger.info(f"Connecting caller to SIP URI: {sip_uri}")

        response = VoiceResponse()
        response.say("Thank you for calling. Connecting you to our AI assistant now.")

        dial = response.dial()
        dial.sip(sip_uri, username=VIDEOSDK_SIP_USERNAME, password=VIDEOSDK_SIP_PASSWORD)

        return Response(content=str(response), media_type="application/xml")

    except HTTPException as http_exc:
        # If room creation fails, inform the caller and hang up
        response = VoiceResponse()
        response.say("Sorry, we encountered an error connecting you. Please try again later.")
        response.hangup()
        logger.warning(f"Returning error TwiML: {http_exc.detail}")
        return Response(content=str(response), media_type="application/xml")
```
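`RequestValidator` implements Twilio's documented signing scheme. It takes the full request URL, appends each POST parameter name followed by its value (names sorted alphabetically), computes an HMAC-SHA1 of that string keyed with your auth token, and Base64-encodes the result. The stdlib sketch below reproduces the scheme to show exactly what is being checked. It assumes each parameter appears once. Keep using the Twilio library in production. Because the URL is part of the signed string, the server must see the same URL Twilio called.

```python
import base64
import hashlib
import hmac
from typing import Mapping


def twilio_signature(auth_token: str, url: str, params: Mapping[str, str]) -> str:
    """Compute the expected X-Twilio-Signature for a form-encoded POST webhook.

    Assumes each POST parameter appears once.
    """
    # Full request URL, then every parameter name + value, sorted by name
    payload = url + "".join(name + params[name] for name in sorted(params))
    digest = hmac.new(auth_token.encode("utf-8"), payload.encode("utf-8"), hashlib.sha1).digest()
    return base64.b64encode(digest).decode("ascii")


def is_valid_twilio_request(auth_token: str, url: str, params: Mapping[str, str], signature: str) -> bool:
    """Compare in constant time; a missing header never validates."""
    if not signature:
        return False
    expected = twilio_signature(auth_token, url, params)
    return hmac.compare_digest(expected.encode("ascii"), signature.encode("utf-8"))
```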

4. Implement the VideoSDK Agent Class

Now, let's look at the VideoSDKAgent class in agent.py, which joins the meeting and represents the AI side of the conversation:

```python
import logging

from videosdk import VideoSDK

logger = logging.getLogger(__name__)


class VideoSDKAgent:
    """Represents an AI Agent connected to a VideoSDK meeting."""

    def __init__(self, room_id: str, videosdk_token: str, agent_name: str = "AI Assistant"):
        self.room_id = room_id
        self.videosdk_token = videosdk_token
        self.agent_name = agent_name
        self.meeting = None
        self.is_connected = False
        self.participant_handlers = {}  # participant.id -> handler, kept for cleanup

        logger.info(f"[{self.agent_name}] Initializing for Room ID: {self.room_id}")
        self._initialize_meeting()

    def _initialize_meeting(self):
        """Sets up the Meeting object using VideoSDK.init_meeting."""
        try:
            # Configure the agent's meeting settings
            meeting_config = {
                "meeting_id": self.room_id,
                "token": self.videosdk_token,
                "name": self.agent_name,
                "mic_enabled": False,  # No outgoing audio until a custom track is attached
                "webcam_enabled": False,  # Agent has no camera
                "auto_consume": True,  # Automatically receive other participants' streams
                # To let the agent speak, supply a custom audio track and enable the mic:
                # "custom_microphone_audio_track": custom_audio_track,
            }

            self.meeting = VideoSDK.init_meeting(**meeting_config)

            # Attach meeting-level event handlers (AgentMeetingEventHandler is defined in step 5)
            meeting_event_handler = AgentMeetingEventHandler(self.agent_name, self)
            self.meeting.add_event_listener(meeting_event_handler)

            logger.info(f"[{self.agent_name}] Meeting object initialized.")

        except Exception as e:
            logger.exception(f"[{self.agent_name}] Failed to initialize VideoSDK Meeting: {e}")
            self.meeting = None  # Ensure meeting is None if init fails

    async def connect(self):
        """Connects the agent to the meeting asynchronously."""
        if not self.meeting:
            logger.error(f"[{self.agent_name}] Cannot connect, meeting not initialized.")
            return

        if self.is_connected:
            logger.warning(f"[{self.agent_name}] Already connected.")
            return

        logger.info(f"[{self.agent_name}] Attempting to join meeting...")
        try:
            await self.meeting.async_join()
            self.is_connected = True
            logger.info(f"[{self.agent_name}] async_join call completed.")
        except Exception as e:
            logger.exception(f"[{self.agent_name}] Error during async_join: {e}")
            self.is_connected = False
```
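The `is_connected` flag only flips after `async_join` completes, so two overlapping calls to `connect()` could both start a join. One way to close that gap is to serialise connection attempts behind an `asyncio.Lock`. The sketch below uses a stand-in `join` coroutine rather than the VideoSDK meeting object. `ConnectGuard` is a hypothetical helper name.

```python
import asyncio
from typing import Awaitable, Callable


class ConnectGuard:
    """Run a join coroutine at most once at a time, and skip it once connected."""

    def __init__(self, join: Callable[[], Awaitable[None]]):
        self._join = join
        # Create the guard inside the running event loop (required on Python < 3.10)
        self._lock = asyncio.Lock()
        self.is_connected = False

    async def connect(self) -> bool:
        """Return True if connected after this call, False if the join failed."""
        async with self._lock:
            # A concurrent caller waits here, then sees is_connected and returns early
            if self.is_connected:
                return True
            try:
                await self._join()
            except Exception:
                self.is_connected = False
                return False
            self.is_connected = True
            return True
```

In `VideoSDKAgent`, the `join` callable would be `self.meeting.async_join`.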

5. Handle Meeting and Participant Events

To process audio from callers, we need handlers for both meeting-level and participant-level events:

```python
from videosdk import MeetingEventHandler, Participant, ParticipantEventHandler, Stream


class AgentParticipantEventHandler(ParticipantEventHandler):
    """Handles events specific to a participant within the meeting."""

    def __init__(self, agent_name: str, participant: Participant):
        super().__init__()
        self.agent_name = agent_name
        self.participant = participant
        logger.info(f"[{self.agent_name}] ParticipantEventHandler initialized.")

    def on_stream_enabled(self, stream: Stream) -> None:
        """Handle Participant stream enabled event."""
        logger.info(
            f"[{self.agent_name}] Stream ENABLED: Kind='{stream.kind}', "
            f"ParticipantName='{self.participant.display_name}'"
        )
        # Only process audio from remote participants (the caller), not the agent itself
        if stream.kind == "audio" and not self.participant.local:
            logger.info(f"[{self.agent_name}] Received AUDIO stream from {self.participant.display_name}")
            # Hand the stream to your STT pipeline here, for example:
            # asyncio.create_task(process_audio_stream(stream))

    def on_stream_disabled(self, stream: Stream) -> None:
        """Handle Participant stream disabled event."""
        if stream.kind == "audio" and not self.participant.local:
            logger.info(f"[{self.agent_name}] Stopped receiving AUDIO stream")
            # Stop any audio processing started in on_stream_enabled


class AgentMeetingEventHandler(MeetingEventHandler):
    """Handles meeting-level events for the Agent."""

    def __init__(self, agent_name: str, agent_instance: "VideoSDKAgent"):
        super().__init__()
        self.agent_name = agent_name
        self.agent_instance = agent_instance  # Reference back to the owning agent
        logger.info(f"[{self.agent_name}] MeetingEventHandler initialized.")

    def on_meeting_joined(self, data) -> None:
        logger.info(f"[{self.agent_name}] Successfully JOINED meeting.")

    def on_meeting_left(self, data) -> None:
        logger.warning(f"[{self.agent_name}] LEFT meeting.")
        self.agent_instance.is_connected = False

    def on_participant_joined(self, participant: Participant) -> None:
        logger.info(f"[{self.agent_name}] Participant JOINED: Name='{participant.display_name}'")
        # Listen for this participant's stream events
        participant_event_handler = AgentParticipantEventHandler(self.agent_name, participant)
        participant.add_event_listener(participant_event_handler)
        self.agent_instance.participant_handlers[participant.id] = participant_event_handler

    def on_participant_left(self, participant: Participant) -> None:
        logger.warning(f"[{self.agent_name}] Participant LEFT: Name='{participant.display_name}'")
        # Remove this participant's event handler
        handler = self.agent_instance.participant_handlers.pop(participant.id, None)
        if handler is not None:
            participant.remove_event_listener(handler)
```
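The `on_stream_enabled` and `on_stream_disabled` hooks above leave audio processing as a comment. A common pattern is to start one asyncio task per remote audio stream and to cancel it when the stream goes away. The sketch below keeps that bookkeeping separate from the SDK. `AudioTaskManager` is hypothetical, and the `process` coroutine you pass in would be something like `process_audio_stream`. Because the VideoSDK handlers are plain methods, `start` calls `asyncio.get_running_loop()`. This assumes the handlers are invoked on the event loop's thread.

```python
import asyncio
from typing import Any, Callable, Coroutine, Dict


class AudioTaskManager:
    """Track one processing task per participant so it can be cancelled later."""

    def __init__(self, process: Callable[[Any], Coroutine[Any, Any, None]]):
        self._process = process
        self._tasks: Dict[str, asyncio.Task] = {}

    def start(self, participant_id: str, stream: Any) -> None:
        """Call from on_stream_enabled; replaces any task already running for this participant."""
        self.stop(participant_id)
        task = asyncio.get_running_loop().create_task(self._process(stream))
        self._tasks[participant_id] = task
        # Drop the reference if the task finishes on its own
        task.add_done_callback(lambda t, pid=participant_id: self._forget(pid, t))

    def stop(self, participant_id: str) -> None:
        """Call from on_stream_disabled or on_participant_left."""
        task = self._tasks.pop(participant_id, None)
        if task is not None and not task.done():
            task.cancel()

    def _forget(self, participant_id: str, task: asyncio.Task) -> None:
        # Only remove the entry if it still points at this task, not a replacement
        if self._tasks.get(participant_id) is task:
            del self._tasks[participant_id]

    def active(self) -> int:
        return len(self._tasks)
```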

Implementing AI Capabilities

To complete our AI phone agent, we need to add the actual intelligence. Here's how you would extend the code to process audio and generate responses:

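```python
import logging

import librosa
import numpy as np
from videosdk import Stream

logger = logging.getLogger(__name__)

TARGET_SAMPLE_RATE = 16000  # Sample rate expected by the STT service


async def process_audio_stream(stream: Stream):
    """Process audio from the caller, transcribe it, and generate responses.

    transcribe_audio, generate_response, text_to_speech and send_audio_response
    are placeholders for your own STT, NLU/LLM and TTS integrations.
    """
    try:
        while True:
            # 1. Receive the next audio frame from the caller's track
            frame = await stream.track.recv()

            # 2. Packed 16-bit audio arrives interleaved with shape (1, samples * channels):
            #    split it into channels, average to mono, and scale to [-1.0, 1.0]
            channels = len(frame.layout.channels)
            samples = frame.to_ndarray()[0].reshape(-1, channels)
            audio_mono = samples.astype(np.float32).mean(axis=1) / np.iinfo(np.int16).max

            # 3. Resample from the frame's rate (typically 48 kHz for WebRTC) to 16 kHz
            audio_resampled = librosa.resample(audio_mono, orig_sr=frame.sample_rate, target_sr=TARGET_SAMPLE_RATE)

            # 4. Convert back to 16-bit PCM bytes, clipping to avoid integer overflow
            audio_clipped = np.clip(audio_resampled, -1.0, 1.0)
            pcm_frame = (audio_clipped * np.iinfo(np.int16).max).astype(np.int16).tobytes()

            # 5. Send to the STT service (e.g. Deepgram). In practice, feed frames into a
            #    streaming STT session rather than transcribing each 20 ms frame separately.
            text = await transcribe_audio(pcm_frame)

            if text:
                # 6. Generate a reply with your NLU/LLM layer
                response_text = await generate_response(text)

                # 7. Convert the reply to speech
                speech_audio = await text_to_speech(response_text)

                # 8. Play it back through the agent's audio track
                await send_audio_response(speech_audio)
    except Exception as e:
        logger.exception(f"Error processing audio stream: {e}")
```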
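WebRTC audio typically arrives in 20 ms frames, and sending each one to an STT service as a separate request adds overhead. A simple improvement, assuming 16 kHz mono 16-bit PCM as produced above, is to buffer frames into fixed-duration chunks before sending them. At 16,000 samples/s × 2 bytes per sample, one 20 ms frame is 640 bytes and a 100 ms chunk is 3,200 bytes. `PcmChunker` is an illustrative helper and is not part of any STT SDK.

```python
from typing import List

SAMPLE_RATE = 16_000  # Hz, after resampling
BYTES_PER_SAMPLE = 2  # 16-bit PCM, mono


class PcmChunker:
    """Accumulate small PCM frames and emit fixed-duration chunks for an STT service."""

    def __init__(self, chunk_ms: int = 100):
        # With the default 100 ms: 16,000 samples/s * 2 bytes * 0.1 s = 3,200 bytes
        self.chunk_bytes = SAMPLE_RATE * BYTES_PER_SAMPLE * chunk_ms // 1000
        if self.chunk_bytes <= 0:
            raise ValueError("chunk_ms must be at least 1")
        self._buffer = bytearray()

    def push(self, pcm: bytes) -> List[bytes]:
        """Add one frame; return every complete chunk now ready to send (possibly none)."""
        self._buffer.extend(pcm)
        chunks = []
        while len(self._buffer) >= self.chunk_bytes:
            chunks.append(bytes(self._buffer[: self.chunk_bytes]))
            del self._buffer[: self.chunk_bytes]
        return chunks

    def flush(self) -> bytes:
        """Return any leftover audio, e.g. when the caller hangs up."""
        rest = bytes(self._buffer)
        self._buffer.clear()
        return rest
```

Inside the frame loop, you would call `chunker.push(pcm_frame)` and forward each returned chunk to the STT connection.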

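This completes the core wiring of our AI phone agent using VideoSDK and SIP integration. The speech-to-text, response-generation, and text-to-speech helpers called in process_audio_stream are left for you to connect to the services of your choice.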

SIP Provider Comparison

To implement AI phone agents, you'll need a SIP provider to connect regular phone calls to your system. Here's a comparison of popular providers:

| Provider | Features | Pricing | Regional Coverage | API Complexity | Best For |
|---|---|---|---|---|---|
| Twilio | Programmable Voice, TwiML, Global Reach | Pay-as-you-go (~$0.0085/min) | Global (100+ countries) | Medium | Developers needing robust documentation and scalability |
| Plivo | Voice API, SMS, WebRTC | Pay-as-you-go (~$0.0065/min) | Global (195+ countries) | Medium | Cost-sensitive applications with global reach |
| Exotel | Voice, SMS, WhatsApp | Monthly plans + usage | India, SE Asia focused | Low | Applications primarily serving Asian markets |
| Vonage | Voice API, WebRTC, Video | Pay-as-you-go (~$0.0085/min) | Global | Medium | Enterprise applications with multiple communication channels |
| Bandwidth | Voice, SMS, E911 | Volume-based pricing | North America focused | Medium | US/Canada applications requiring carrier-grade reliability |
| Telnyx | Voice API, SIP Trunking | Pay-as-you-go (~$0.0060/min) | Global | High | Applications needing advanced control and lower costs |

Real-World Applications

AI phone agents built with VideoSDK can be used for various applications:

1. Customer Support Automation

Create a phone number that automatically answers customer inquiries, handles common questions, and escalates to human agents when necessary.

2. Appointment Scheduling

Build a system that answers calls, understands scheduling requests, checks calendar availability, and confirms appointments.

3. Information Services

Develop phone-based information services for checking account balances, order status, or business hours without requiring customers to use apps or websites.

4. Healthcare Triage

Implement initial patient intake and triage via phone, collecting symptoms and medical history before connecting to healthcare providers.

5. Multilingual Support

Create phone agents that can understand and speak multiple languages, expanding your support capabilities globally without additional staff.

Best Practices for AI Phone Agents

When building AI phone agents with VideoSDK, follow these best practices:

1. Voice Optimization

Ensure your text-to-speech voices sound natural and match your brand. Consider using premium voice services like ElevenLabs for the most natural-sounding responses.

2. Progressive Disclosure

Start with simple, focused interactions rather than overwhelming callers with options. Gradually introduce more complex capabilities as users become comfortable.

3. Fallback Mechanisms

Always implement fallback options for when the AI doesn't understand or can't handle a request. This might include escalation to human agents or alternative contact methods.
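A fallback rule can be as simple as counting consecutive turns that the agent could not handle. The sketch below escalates to a human after a configurable number of low-confidence or unrecognised turns. The `confidence` score is assumed to come from your STT or NLU service, the `"human_agent"` intent name is hypothetical, and the threshold values are placeholders that you would tune against real calls.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Action(Enum):
    RESPOND = "respond"    # Handle the request normally
    REPROMPT = "reprompt"  # Ask the caller to rephrase
    ESCALATE = "escalate"  # Transfer to a human agent


@dataclass
class FallbackPolicy:
    min_confidence: float = 0.6
    max_failures: int = 2
    failures: int = 0

    def decide(self, intent: Optional[str], confidence: float) -> Action:
        """Choose the next action for one caller turn."""
        if intent == "human_agent":  # Hypothetical intent: caller asked for a person
            return Action.ESCALATE
        if intent is None or confidence < self.min_confidence:
            self.failures += 1
            return Action.ESCALATE if self.failures >= self.max_failures else Action.REPROMPT
        self.failures = 0  # A successful turn resets the counter
        return Action.RESPOND
```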

4. Conversation Design

Design conversation flows specifically for voice, recognizing that voice interactions differ from text. Consider timing, turn-taking, and natural language patterns.
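Turn-taking on a phone line usually depends on detecting when the caller has stopped speaking. The minimal energy-based endpointer below treats a frame as silent when its RMS level falls below a threshold, and it ends the turn after a run of silent frames that follows speech. This is a deliberately naive stand-in for a proper voice activity detector or for the end-of-speech events that many streaming STT services provide. The threshold and frame counts are illustrative assumptions.

```python
import math
import sys
from array import array


def rms(pcm: bytes) -> float:
    """Root-mean-square level of little-endian 16-bit PCM samples."""
    samples = array("h")
    samples.frombytes(pcm)
    if sys.byteorder == "big":
        samples.byteswap()  # array uses native byte order; PCM here is little-endian
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


class Endpointer:
    """Report end of turn after a run of quiet frames that follows speech."""

    def __init__(self, threshold: float = 500.0, silence_frames: int = 25):
        self.threshold = threshold            # RMS level treated as speech
        self.silence_frames = silence_frames  # e.g. 25 x 20 ms frames = 500 ms of silence
        self._heard_speech = False
        self._quiet_run = 0

    def push(self, pcm: bytes) -> bool:
        """Feed one frame; return True when the caller appears to have finished a turn."""
        if rms(pcm) >= self.threshold:
            self._heard_speech = True
            self._quiet_run = 0
            return False
        if not self._heard_speech:
            return False  # Silence before any speech never ends a turn
        self._quiet_run += 1
        if self._quiet_run >= self.silence_frames:
            self._heard_speech = False  # Reset for the caller's next turn
            self._quiet_run = 0
            return True
        return False
```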

5. Testing with Real Calls

Test your AI phone agent with real phone calls across different connection qualities, accents, and background noise levels to ensure robustness.

Conclusion

Building AI phone agents with VideoSDK provides a powerful way to bridge traditional telephony with cutting-edge AI. By combining VideoSDK's real-time communication capabilities with SIP integration and modern AI services, developers can create intelligent, conversational experiences accessible through ordinary phone calls.

The architecture and code examples in this guide provide a starting point for building sophisticated AI phone agents that can revolutionize customer interactions, streamline operations, and create new opportunities for voice-based services. Whether you're enhancing customer support, automating appointment scheduling, or creating entirely new voice-based services, VideoSDK's platform gives you the tools to bring your AI phone agent vision to life.

Start building your AI phone agent today, and join the growing community of developers creating the next generation of voice-based AI experiences.


FAQ